Visual Question Answering (VQA) is transforming how machines understand and interact with visual data. At the intersection of computer vision and natural language processing, VQA systems such as the Llava Model answer natural-language questions about images by combining image recognition with contextual understanding. This post explores the Llava Model and its integration with ezML, and walks through how to use it as a VQA API in your own applications.

Understanding the Llava Model in VQA

The Llava Model is a large multimodal model that connects a pretrained vision encoder to a large language model. It does more than recognize what is in an image: it interprets the image in context and answers questions about it in natural language.

Core Strengths of the Llava Model:

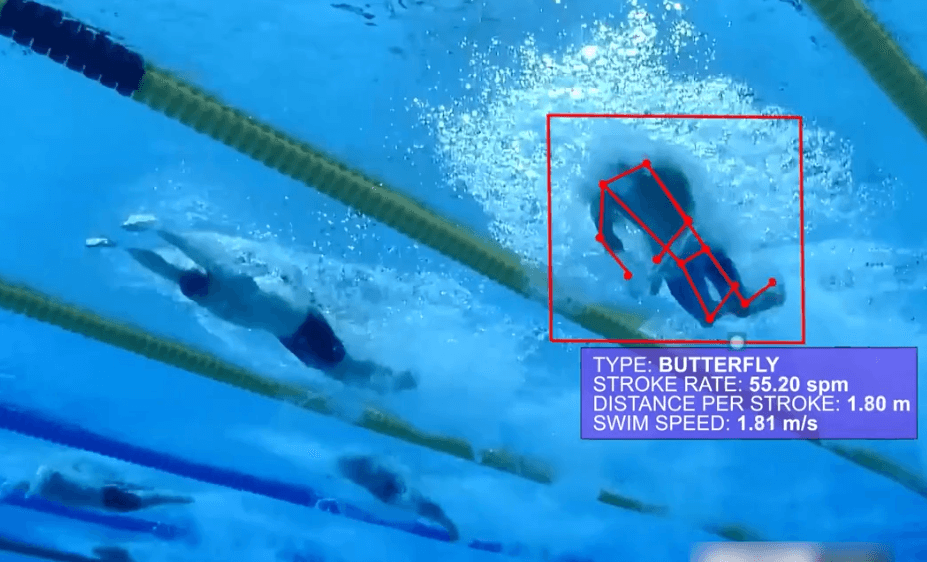

Complex Scene Interpretation: Traditional image recognition models typically assign a label or detect objects. The Llava Model goes further with complex scenes: it picks out fine details and reasons about the relationships and interactions between the objects it sees.

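Precision in Answering Queries: The Llava Model accepts open-ended questions, from simple factual ones ("What color is the car?") to ones that require reasoning about the scene ("Why might this person be carrying an umbrella?").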

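Advanced Integration of Vision and Language: The model's effectiveness comes from tightly linking its vision and language components. Features extracted from the image are mapped into the same representation space the language model uses for text, so the model reasons over the image and the question together when generating an answer.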
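To make that vision-language link more concrete: in the original LLaVA design, patch features from a pretrained vision encoder pass through a learned projection (a linear layer in the first version, a small MLP in later versions) into the language model's word-embedding space, and these "visual tokens" are fed to the language model alongside the question's text tokens. The sketch below illustrates only that projection-and-concatenation step, using toy dimensions and random weights. The function names are hypothetical, placing the visual tokens before the text tokens is a simplification, and this is not ezML's implementation.

```python
import random


def project_visual_features(patch_features, weight, bias):
    """Map each vision-encoder patch feature (dim d_v) into the LLM
    embedding space (dim d_t) with a single linear layer: y = W x + b."""
    projected = []
    for feat in patch_features:
        token = [
            sum(w * x for w, x in zip(row, feat)) + b
            for row, b in zip(weight, bias)
        ]
        projected.append(token)
    return projected


def build_input_sequence(visual_tokens, text_embeddings):
    # Simplification: visual tokens go before the question's token
    # embeddings, and the language model attends to both when answering.
    return visual_tokens + text_embeddings


# Toy sizes chosen for illustration; real models are far larger.
D_VISION, D_TEXT, NUM_PATCHES, NUM_TEXT_TOKENS = 4, 3, 2, 5
rng = random.Random(0)

patches = [[rng.uniform(-1, 1) for _ in range(D_VISION)] for _ in range(NUM_PATCHES)]
W = [[rng.uniform(-1, 1) for _ in range(D_VISION)] for _ in range(D_TEXT)]
b = [0.0] * D_TEXT
text = [[rng.uniform(-1, 1) for _ in range(D_TEXT)] for _ in range(NUM_TEXT_TOKENS)]

visual_tokens = project_visual_features(patches, W, b)
sequence = build_input_sequence(visual_tokens, text)
print(len(sequence), len(sequence[0]))  # 7 3
```

After projection, each image patch has the same width as a text token embedding. That shared width is what lets a single language model process both.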

Implementing Llava as a VQA API on ezML

ezML simplifies integrating the Llava Model into your systems, making it accessible even to those with limited technical expertise. Here's how to get started:

Integrating Llava with ezML:

Account Setup: Create an account on ezML to get 2500 free deployment credits: ezML Account Setup

Authorize ezML’s API: Find your client_key and client_secret in Settings. You will need them to authenticate your API requests.

API Configuration: Review the VQA API's request and response format: VQA API Documentation

Embedding in Your Application: Send an image and a question to the VQA API from your project, then use the returned answer in your application.
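The request flow above can be sketched with Python's standard library. This is a minimal sketch under stated assumptions. The endpoint URL, the JSON field names (`image`, `question`), the header names that carry client_key and client_secret, and the environment variable names are all hypothetical placeholders, not ezML's documented API. The real API may also require exchanging the credentials for an access token first. Take the actual values from the VQA API documentation.

```python
import base64
import json
import os
import urllib.request

# HYPOTHETICAL placeholder: replace with the endpoint from the ezML
# VQA API documentation. This is not ezML's real URL.
VQA_ENDPOINT = "https://example.invalid/vqa"


def build_vqa_request(image_bytes, question, client_key, client_secret):
    """Build (but do not send) a JSON POST request for a VQA query.

    The payload layout and the credential headers are assumptions.
    """
    payload = {
        "image": base64.b64encode(image_bytes).decode("ascii"),  # hypothetical field
        "question": question,                                    # hypothetical field
    }
    return urllib.request.Request(
        VQA_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "X-Client-Key": client_key,        # hypothetical header name
            "X-Client-Secret": client_secret,  # hypothetical header name
        },
        method="POST",
    )


def ask(image_path, question):
    """Read an image, send the question, and return the decoded JSON response."""
    with open(image_path, "rb") as f:
        image_bytes = f.read()
    req = build_vqa_request(
        image_bytes,
        question,
        os.environ["EZML_CLIENT_KEY"],     # hypothetical env var name
        os.environ["EZML_CLIENT_SECRET"],  # hypothetical env var name
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Example (requires the real endpoint and valid credentials):
# print(ask("product.jpg", "What color is this shirt?"))
```

Because request construction is kept separate from sending, you can inspect or unit-test the payload without network access, and you can swap in the real endpoint and field names in one place.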

For help or questions, join our Discord: ezML Discord

Applications and Use Cases

Combined with ezML, Llava’s VQA capabilities can be applied across a range of sectors:

E-Commerce: Enhance customer service by answering queries about product images.

Medical Imaging: Provide insights into medical scans through descriptive language.

Educational Tools: Offer interactive learning experiences using image-based questions and answers.

Conclusion: The Future of VQA with Llava and ezML

The Llava Model, integrated with ezML’s platform, represents a significant advancement in VQA technology. With the steps in this guide, developers, businesses, and AI enthusiasts can start using the VQA API to meet their own visual question answering needs.